Most music doesn’t start with sound. It starts with intention—an emotion you want to express, a short line of text, a scene you can picture but can’t yet hum. For years, that early stage was fragile because turning words into audio required technical commitment before you knew whether the idea was worth it. That’s why workflows built around Text to Song AI feel fundamentally different. They let language act as a creative trigger, not just a rough note you’ll “translate later.”

What struck me when using this approach wasn’t just speed. It was timing. You get to hear an idea while it’s still flexible, before you’ve emotionally invested in one version of it. That alone changes how creative decisions unfold.

The Usual Bottleneck: You Must Choose Before You Understand

Traditional music creation pushes you into decisions early:

- tempo before emotion is clear

- instrumentation before the mood is proven

- structure before you know whether the hook deserves it

None of these choices are wrong—but making them too soon creates friction. If the idea turns out weak, you’ve already spent energy defending it. Many half-finished tracks die this way, not because they’re bad, but because the cost of finding out was too high.

A language-first workflow lowers that cost.

A New Creative Order: Meaning → Sound → Structure

Instead of building from mechanics upward, the process reverses the flow:

- Meaning – What should this feel like?

- Sound – How does that meaning translate sonically?

- Structure – How should it unfold over time?

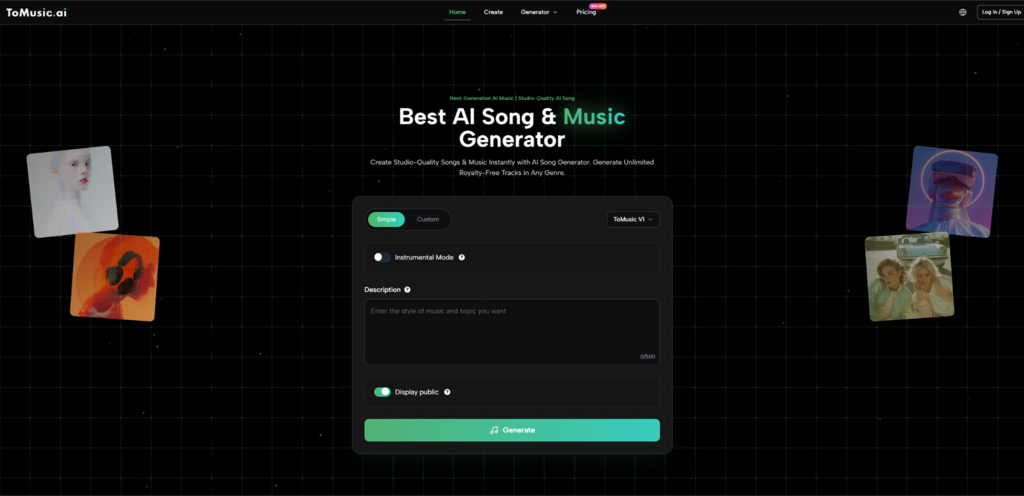

This order mirrors how listeners experience music. They don’t hear plugins or chord theory; they hear feeling, movement, and contrast. ToMusic.ai aligns the creation process more closely with that reality.

What Changes When Words Become the Interface

Working this way introduces a few subtle but powerful shifts.

You evaluate earlier

When you hear a full draft quickly, your brain switches from imagining to judging. You’re no longer asking “Could this work?”—you’re asking “Does this work?”

You commit later

Because generation is fast, you’re less tempted to lock in the first decent result. That delay in commitment leads to better selection, not indecision.

Language becomes a control surface

In my testing, small changes in phrasing—adding a sense of progression, contrast, or restraint—often led to more noticeable improvements than adding technical detail.

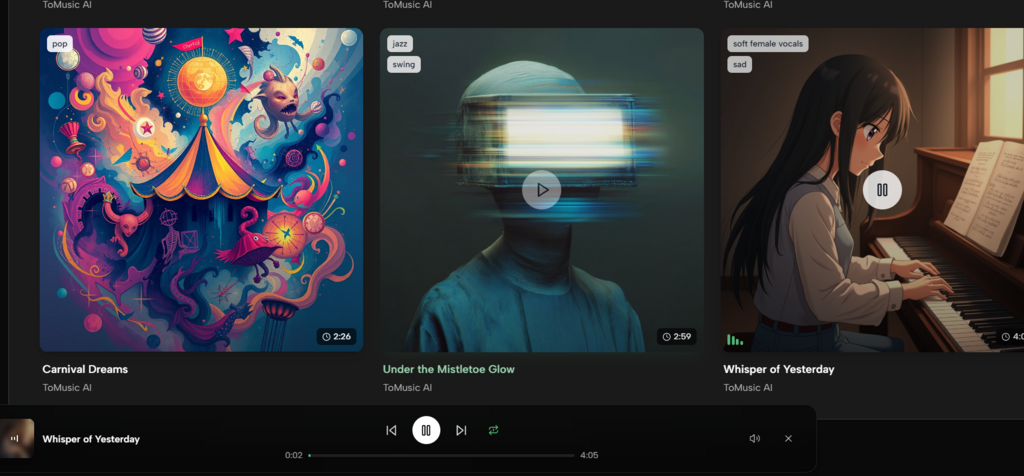

From Words to Performance: Where Lyrics Enter

This workflow becomes especially revealing when lyrics are involved. A Lyrics to Song process doesn’t just generate a song—it exposes your writing.

When lyrics are sung back to you, a few things happen instantly:

- lines that read well but sing poorly stand out

- syllable overload becomes obvious

- emotional peaks (or lack of them) are impossible to ignore

I found this feedback loop invaluable, even when I didn’t keep the generated version. Hearing lyrics performed—even imperfectly—made rewriting faster and more honest.

How the System Feels to Use (Beyond “Features”)

It’s more useful to think in terms of roles than tools.

Your role

- articulate intent clearly

- listen critically

- adjust language, not knobs

- decide what resonates

The system’s role

- interpret text as a range of likely musical choices

- propose melody, rhythm, and pacing

- offer a concrete version of an abstract idea

Each generation feels like a suggestion, not a solution. The question becomes: Is this closer to or farther from what I meant?

Iteration as a Creative Advantage

One of the biggest changes I noticed was how iteration affected judgment.

When iteration is slow, creators defend their work.

When iteration is fast, creators compare.

That comparison sharpens taste. Over multiple generations, patterns emerge:

- which emotional arcs feel convincing

- where energy drops unintentionally

- how much structure is “enough”

Instead of guessing, you learn by listening.

Language-First vs Traditional Production

| Dimension | Language-first workflow | Traditional production |
| --- | --- | --- |
| Entry point | Text, lyrics, emotional intent | DAW session, instruments |
| Time to first full draft | Short (from testing) | Often long |
| Early focus | Meaning and mood | Technical setup |
| Iteration cost | Low | High |
| Attachment risk | Minimal | High |
| Best stage | Ideation, validation | Refinement, polish |

These approaches aren’t competitors; they belong at different moments. ToMusic.ai excels early, before you’re certain an idea is worth developing.

Observations That Changed How I Prompt

After many iterations, a few principles consistently held true:

1. Describe movement, not just state

“Quiet tension that gradually opens into relief” worked better than static adjectives like “sad” or “cinematic.”

2. Fewer constraints can improve coherence

Overloaded prompts sometimes produced scattered results. Clear intent with room to interpret often sounded more natural.

3. Lyrics are stress tests

If a line doesn’t sing well, the problem is usually the line—not the model. That realization saves time.

4. Consistency requires templates

For multi-track projects, reusable prompt structures helped maintain a shared identity across outputs.
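To make "reusable prompt structures" concrete, here is a minimal sketch of one way to template prompts for a multi-track project. It assumes prompts are plain text; the `PromptTemplate` class, its field names, and the template wording are all hypothetical illustrations, and the code only builds strings. It does not call any ToMusic.ai interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptTemplate:
    """Hypothetical reusable prompt structure shared across a multi-track project."""

    # Project-wide identity, repeated verbatim in every prompt.
    genre: str
    instrumentation: str
    vocal_style: str

    def build(self, start_mood: str, end_mood: str, theme: str) -> str:
        # Describe movement (start -> end) rather than a single static adjective.
        return (
            f"{self.genre}, {self.instrumentation}, {self.vocal_style}. "
            f"Begins with {start_mood} that gradually opens into {end_mood}. "
            f"Theme: {theme}."
        )


# One template for the whole project keeps a shared identity across tracks.
album = PromptTemplate(
    genre="Indie folk",
    instrumentation="fingerpicked acoustic guitar and soft strings",
    vocal_style="breathy female vocal",
)

# Per-track values: (start mood, end mood, theme). Illustrative only.
tracks = [
    ("quiet tension", "relief", "leaving home"),
    ("restless energy", "calm acceptance", "a long night drive"),
]

for start, end, theme in tracks:
    print(album.build(start, end, theme))
```

Keeping the shared fields in one frozen object means only the mood arc and theme change from track to track, which is one way to combine principle 1 (describe movement) with principle 4 (consistency through templates).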

Limits That Make the Experience More Real

Acknowledging constraints makes the workflow more trustworthy.

- Quality varies between generations

Strong results are common, but not guaranteed on the first try.

- Input clarity matters

Vague prompts lead to generic outputs. The system reflects the signal you provide.

- Vocals can expose weaknesses

Dense or rhythmically awkward lyrics sometimes need rewriting to sound natural.

These limits don’t reduce value—they define how to collaborate with the tool effectively.

Who This Way of Working Fits Best

From what I’ve seen, this approach resonates most with:

- writers who think in language before sound

- creators producing music for video or digital media

- teams needing fast musical direction

- producers looking for idea generation outside familiar habits

It’s especially useful when momentum matters more than perfection.

A Different Conclusion

The real shift ToMusic.ai introduces isn’t automation—it’s timing. You hear ideas earlier, while they’re still easy to change. Once an idea is audible, you can judge it honestly. And honest judgment is what leads to better creative outcomes.

When text turns into sound quickly, music creation stops feeling like a wall you have to climb. It becomes a conversation—one where intention speaks first, and structure follows.